The role of big data in cloud computing

Author: Убайдуллаев Хусанбой Илхомжон угли

Section: Technical teaching aids

Published in Образование и воспитание No. 1 (1), February 2015

Publication date: 05.02.2015

Bibliographic description:

Убайдуллаев, Х. И. The role of big data in cloud computing / Х. И. Убайдуллаев // Образование и воспитание. 2015. No. 1 (1). P. 73-77. URL: https://moluch.ru/th/4/archive/4/25/ (accessed: 24.04.2024).

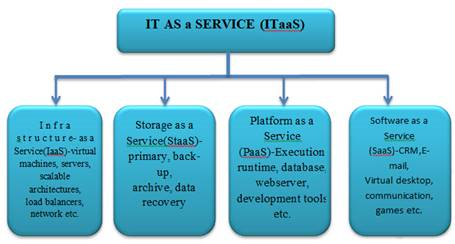

Big data management is becoming crucial because of the growth of social networking websites, powerful mobile devices, sensors, and cloud computing. This paper deals with the possibilities, vulnerabilities, problems, solutions, and advantages of maintaining big data in the cloud. Cloud computing is essentially information technology (IT) delivered as a service. The name was inspired by the cloud symbol that is often used to represent the Internet in flowcharts and diagrams. It does not introduce fundamentally new technology; rather, it provides IT services over the Internet to a large number of clients at low cost. IT companies and their clients now work with enormous amounts of data, and the volume grows rapidly as new data is created. This is what is termed big data, and maintaining it has become unavoidable. Cloud computing is a viable solution for this. Data mining is a concept connected with both big data and cloud computing. The following sections examine these three topics and their interrelation.

The fundamental services offered through cloud computing are the following.

Figure 1. The main services offered by cloud computing, in order

- Software as a Service (SaaS): the most prominent of the services; it delivers an application over the Internet or an intranet.

It consists of a single instance of the software running in the cloud environment and provides multitenancy. Examples are Google Apps, including Gmail, Google Calendar, Docs, and Sites.

- Platform as a Service (PaaS): delivers a platform or solution stack on a cloud infrastructure, integrating an OS, middleware, application software, and even a development environment. It may be encapsulated and served through an Application Program Interface (API).

e.g., Google App Engine.

- Infrastructure as a Service (IaaS): delivers computer infrastructure in a virtualized environment, with basic storage and computing capabilities for handling workloads. Services include servers, switches, routers, and so on.

e.g., Joyent, which supports Facebook in its application developer program and produces a line of virtualized servers to provide on-demand infrastructure.

- Storage as a Service (StaaS): provides services such as backup and data recovery.

e.g., Amazon provides the DynamoDB service, which uses solid-state drives (SSDs) for big data storage.

Cloud services may be provided at any of the traditional layers, from hardware to applications. When visibility is taken into account, clouds are classified into four types: public, private, community, and hybrid. The first three are described below.

- Public clouds: run by third parties and often hosted away from customer premises; they offer customers many benefits, including the ability to scale up and down on demand.

- Private clouds: services are provided only to trusted users and enterprises and are intended for the exclusive use of one client.

- Community clouds: information is shared among several organizations on a cloud that is managed by the organizations themselves or by a third party and hosted by a service provider.

The merits and demerits of cloud computing are as follows.

Merits:

- For individuals, cloud computing saves money on hardware.

- For enterprises, less is spent on onsite hardware, capital investment, administration, and maintenance, and the service scales to handle variable business needs.

- For the environment, the electricity consumption and heat release of local servers are avoided. Virtual cloud servers save electricity by sharing resources.

Demerits:

- Monthly fees have to be paid.

- Business data is stored off-site.

- Data is at risk if the service provider goes out of business. Encryption of data in transmission and in storage has to be taken into consideration, and this may limit the services available.

- Programmers need training in cloud standards.

Challenges and vulnerabilities with big data

A) The existing cloud infrastructure may not support big data:

Traditional clouds must be extended with additional features in order to solve the complex problems associated with big data. Research on supporting big data in the clouds is still at an early stage.

Example Applications:

Table 1

Application areas, the big data used, the algorithms applied, and the computing styles

| Application | Big data | Algorithms | Computing style |
| --- | --- | --- | --- |
| Scientific study (e.g., earthquake study) | Ground model | Earthquake simulation, thermal conduction | HPC |
| Internet library search | Historic web snapshots | Data mining | MapReduce |
| Virtual world analysis | Virtual world database | Data mining | TBD |
| Language recognition | Text corpuses | Speech recognition | MapReduce and HPC |

B) The storage may not be sufficient for handling big data:

Conventional storage structures may not support big data. Scalable architectures are one way to address this problem.

D) Even if big data is maintained efficiently, providing security in a cloud computing environment is difficult. Security is a major problem nowadays, as the existing Internet already suffers from cracking. China and India are the countries most vulnerable to cracking in the world.

E) Maintaining a balance between ethical values and big data management is hard to achieve.

The very idea of handling big data raises ethical problems, which makes this balance difficult to strike.

F) Large-scale data may contribute to global warming.

The volume of data generated globally increases by 40 % per year, and storing and processing it consumes energy, so many companies are looking for a better solution. To some extent, cloud computing is a solution to this problem.

G) Big data recovery is also a problem.

Handling big data is itself a major problem, since it needs high storage capacity. If the data cannot be saved at the current location that has the infrastructure to store it, recovery becomes a serious problem unless sufficient backup mechanisms exist.

H) Managing large data files, managing many files, simultaneous access to files, and long-term data management are all problems in big data processing.

I) Industries rely on simulated, data-driven environments rather than physical experiments.

J) Existing wired and wireless networks and frequency management also need diverse techniques for handling complicated and varied big data.

Amazon is a leader in big data and analytics. Cluster Compute is Amazon's supercomputing service for handling large amounts of data.
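To make the 40 % annual growth rate cited under item F more concrete, the following minimal sketch computes how a data volume compounds at that rate and how long it takes to double. It assumes the rate stays constant from year to year, which the source does not claim; the function names are illustrative.

```python
import math


def projected_volume(initial_volume, annual_growth, years):
    """Volume after `years` of compound growth at a constant annual rate."""
    return initial_volume * (1 + annual_growth) ** years


def doubling_time(annual_growth):
    """Years needed for the volume to double at a constant annual rate."""
    return math.log(2) / math.log(1 + annual_growth)


# Assumption: growth holds steady at 40 % per year.
five_year_factor = projected_volume(1.0, 0.40, 5)
years_to_double = doubling_time(0.40)
```

Under this assumption the volume doubles roughly every two years and grows more than fivefold in five years, which is why storage that is sufficient today may not be for long.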

Advantages of cloud computing with big data

- Heavy cost reduction

McKinsey's report «Big Data: The next frontier for innovation, competition, and productivity» [3] states that 30 billion pieces of content are shared on Facebook every month and that IT expenses have increased by 5 %. It also estimates that big data could generate 330 billion dollars of value in US health care (more than twice the yearly medical expenses of Spain) and a 250 billion euro reduction in the European public service sector (equal to the GDP of Greece), and that by 2018 the USA could need 140,000 to 190,000 analysis specialists and 1.5 million data managers.

- Multitenancy

Cloud service providers can serve a large number of clients at low cost, while each client feels as if it has its own infrastructure, database, middleware, and other facilities.

- Business market creation

A large business market can be created through cloud computing and its service management.

- Speed in big data processing

Massive volumes of data can be processed at higher speed in a cloud environment. Analytics can help in achieving this task.
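The multitenancy advantage listed above can be illustrated with a small sketch: one shared application instance keeps each client's data in a separate namespace, so every client sees only its own records. This is a simplified, in-memory illustration; the class name `MultiTenantStore` and the tenant identifiers are hypothetical and do not describe any particular provider.

```python
class MultiTenantStore:
    """One shared application instance that keeps each tenant's data separate."""

    def __init__(self):
        # tenant_id -> that tenant's private key/value data
        self._data_by_tenant = {}

    def put(self, tenant_id, key, value):
        """Store a value inside the namespace of one tenant."""
        self._data_by_tenant.setdefault(tenant_id, {})[key] = value

    def get(self, tenant_id, key, default=None):
        """Read a value; a tenant can never see another tenant's keys."""
        return self._data_by_tenant.get(tenant_id, {}).get(key, default)

    def tenant_count(self):
        """Number of tenants currently served by this single instance."""
        return len(self._data_by_tenant)


store = MultiTenantStore()  # a single instance serves every client
store.put("client_a", "plan", "basic")
store.put("client_b", "plan", "premium")
```

Both clients use the same key, yet each reads back only its own value, which is the impression of a private facility that the text describes.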

Layered structure of big data as a service

In a cloud computing environment that provides big data as a service, the structure can be depicted as in Figure 2. The bottom layer is the cloud infrastructure, the lowest level of abstraction, which provides computing and storage as services. The next layer up is the data fabric service offered by service providers; certain database management services, such as data aggregation, may be considered data fabric services. At the next level, a service provider offers an execution environment in addition to data management. The topmost layer, at the highest level of abstraction, is predictive analytics software as a service. This layer executes scripts and queries and generates reports and visualizations.

Figure 2. Big data as a service (layered approach)

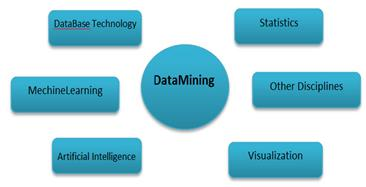

Application of data mining algorithms

Figure 3. Applications of data mining

The data mining algorithms shown in Table 2 are conventional and are also applicable to big data mining. However, because big data involves large volumes of data, these traditional algorithms need to be parallelized. Data mining algorithms can be parallelized using three strategies.

A) Independent Search:

In this method, each processor can access the whole dataset, but each one starts searching from a different location in the search space. The starting point may be chosen randomly.

B) Parallelize a sequential data mining algorithm:

In this method, the intermediate results are partitioned across processors. The local concepts are checked for global correctness by taking the entire dataset.

C) Replicate a sequential data mining algorithm:

In this method, each processor is allocated a partition of the entire dataset. All processors work on their local partitions, execute the sequential algorithm, and obtain a result that is only locally correct. Finally, the processors exchange their results to check whether they are globally correct.
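Strategy C can be sketched as follows. Frequent-item counting is chosen here purely for illustration (the source does not name a specific algorithm), threads stand in for processors, and the function names are hypothetical. Each worker runs the same sequential routine on its own partition, and the locally correct results are then exchanged and checked against the whole dataset.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def local_frequent(partition, min_support):
    """Sequential step on one partition: items frequent within that partition."""
    counts = Counter(item for transaction in partition for item in set(transaction))
    threshold = min_support * len(partition)
    return {item for item, count in counts.items() if count >= threshold}


def replicated_mining(dataset, n_workers, min_support):
    """Run the sequential step per partition, then verify candidates globally."""
    # Allocate each worker its own partition of the dataset.
    partitions = [dataset[i::n_workers] for i in range(n_workers)]
    partitions = [p for p in partitions if p]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        local_results = list(
            pool.map(lambda p: local_frequent(p, min_support), partitions)
        )
    # Exchange step: pool the locally correct results into one candidate set.
    candidates = set().union(*local_results)
    # Global check: keep only candidates that are frequent in the whole dataset.
    global_counts = Counter(
        item for transaction in dataset for item in set(transaction)
        if item in candidates
    )
    threshold = min_support * len(dataset)
    return {item for item in candidates if global_counts[item] >= threshold}


transactions = [["a", "b"], ["a", "c"], ["a", "b"], ["b", "d"], ["a"], ["c", "d"]]
frequent = replicated_mining(transactions, n_workers=2, min_support=0.5)
```

In this example one partition reports "c" and "d" as locally frequent, but the global check rejects them; an item that is frequent overall is always frequent in at least one partition, so the candidate set never misses a correct answer.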

Deploying parallel strategies for mining tasks is the greatest challenge in this area. Protecting the privacy of data owners while conducting data mining tasks over federated clouds is another major problem.
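Strategy A, independent search, can be sketched with a one-dimensional k-means clustering. The choice of k-means is an assumption made for illustration and is not taken from the source. Every worker sees the whole dataset but starts from a different random point in the search space (here, a different pair of initial centers), and the best result is kept. Threads stand in for processors, and the function names are hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def kmeans_1d(data, seed, k=2, iterations=20):
    """Sequential search over the whole dataset from a seed-dependent start."""
    rng = random.Random(seed)
    centers = rng.sample(data, k)  # random starting point in the search space
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for x in data:
            nearest = min(range(k), key=lambda i: abs(x - centers[i]))
            clusters[nearest].append(x)
        # Keep the old center if a cluster happens to be empty.
        centers = [
            sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)
        ]
    error = sum(min((x - c) ** 2 for c in centers) for x in data)
    return error, sorted(centers)


def independent_search(data, seeds):
    """Each worker searches the full dataset from its own start; keep the best."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda seed: kmeans_1d(data, seed), seeds))
    return min(results)  # lowest squared error wins


points = [1, 2, 3, 10, 11, 12]
best_error, best_centers = independent_search(points, seeds=[0, 1, 2, 3])
```

No data is partitioned in this strategy; the parallelism comes only from exploring several starting points at once.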

Latest real-time solutions to big data problems

To reduce delays, data is not moved back and forth for computation and processing; instead, the analytics and processing are moved to the data.
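The idea of moving the processing to the data can be sketched as follows. Each node holds its records locally and accepts a function to run on them, so only a small partial result crosses the network instead of the raw records. The `DataNode` class and the node names are hypothetical; a real system would ship the code over a network rather than call it in-process.

```python
class DataNode:
    """A storage node that runs submitted code next to its own records."""

    def __init__(self, name, records):
        self.name = name
        self._records = records  # stays on the node; never shipped out

    def run(self, analytic):
        """Execute the analytic locally and return only its (small) result."""
        return analytic(self._records)


def global_mean(nodes):
    """Compute a mean by collecting (sum, count) pairs instead of raw data."""
    partials = [node.run(lambda records: (sum(records), len(records)))
                for node in nodes]
    total = sum(part_sum for part_sum, _ in partials)
    count = sum(part_count for _, part_count in partials)
    return total / count


nodes = [DataNode("node-1", [1, 2, 3]), DataNode("node-2", [4, 5])]
mean = global_mean(nodes)
```

However many records a node stores, it returns just two numbers, which is where the reduction in delay comes from.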

Here is one example of a cloud infrastructure that supports mobile access to file servers, on-demand access, and centralized control. For small businesses, Windows File Server has long served local area networks, with file sharing for access and file locking for security. But this works only for users who are connected locally. When a remote user needs a local file, connecting through a Virtual Private Network (VPN) just to view or modify it is not easy; a hybrid of local storage and cloud storage can be used instead. It comes in two variations.

- Gladinet cloud [10] with the File Server Agent Component

- Gladinet cloud Enterprise [10] without the File Server Agent Component

After the File Server Agent Component is installed, local files can be exposed to online access. Distributed locking is provided through group policy, so that local Word and Excel files are locked automatically while they are being edited.
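The lock-on-edit behaviour described above can be illustrated with a minimal sketch. This is not Gladinet's API; the `FileLockManager` class is hypothetical and keeps its registry in one process, whereas a real distributed lock would need a service shared by local and remote users.

```python
import threading


class FileLockManager:
    """Tracks which user currently holds the edit lock on each file."""

    def __init__(self):
        self._owners = {}  # file path -> user holding the lock
        self._mutex = threading.Lock()  # protects the registry itself

    def acquire(self, path, user):
        """Lock a file for editing; fails if another user already holds it."""
        with self._mutex:
            owner = self._owners.get(path)
            if owner is None or owner == user:
                self._owners[path] = user
                return True
            return False

    def release(self, path, user):
        """Unlock a file; only the current holder may release it."""
        with self._mutex:
            if self._owners.get(path) == user:
                del self._owners[path]
                return True
            return False


locks = FileLockManager()
local_got_lock = locks.acquire("report.docx", "local_user")
remote_got_lock = locks.acquire("report.docx", "remote_user")
```

Here the remote user's attempt fails while the local user is editing, and succeeds once the lock has been released.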

In 2012, IBM provided:

- data security solutions for Hadoop and big data environments, a special masking scheme for sensitive data, and support for the latest Key Management Interoperability Protocol (KMIP).

- prevention of unauthorized access and protection against the latest threats with advanced analytics.

- the QRadar security intelligence platform to collect, store, analyze, and retrieve log, threat, vulnerability, and security information from distributed locations.

The Cisco Global Infrastructure Services team provided:

- a cloud storage service for any employee who needs to store large unstructured data, such as video.

- a storage cloud service called S-Cloud to store, manage, and protect globally distributed, unstructured content.

CloudSigma built a tiered architecture with solid-state drives (SSDs) and magnetic storage for storing large volumes of data.

Some data centers, such as IBM's cloud data center, analyze log files before discarding them in order to improve problem anticipation and the overall business efficiency of the data center.

According to an Intersect360 Research study, High Performance Computing (HPC) users and big data applications prefer private clouds to public clouds, because private cloud deployment is well suited to big data computation.

Aspera on Demand delivers scale-out transfer capacity for big data.

Recent trends

Volume, variety, velocity, and veracity (a term introduced by IBM, meaning truthfulness and trustworthiness) are the four characteristics of big data. The first two have been discussed to some extent so far. This section focuses on the latter two.

Human life is changing rapidly. The trend is moving from 3G and 4G communications, cell phones, and wallets towards smartphones, with which we can perform the following tasks.

- making phone calls

- sending texts and e-mails

- sending photos

- tracking locations

- checking bank account balances

- sending secure payments for consumer transactions, and so on.

In this way, almost all business at the level of individuals can be carried out. For organizations and big data centers that handle large volumes of data, sufficient network throughput is needed to move this data at high velocity. Application areas of high-velocity big data include financial services, stock brokerage, weather tracking, movies and entertainment, and online retail. Unfortunately, most big data analytics work in static data environments. For real-time events, there are so far no sufficient analytics to support big data at high velocity, that is, moving big data. Research on moving big data strategies is ongoing, and companies depend on these studies to gain competitive advantage, given the importance attached to big data today.
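The difference between analytics on static data and analytics on moving data can be illustrated with a sliding-window average. It updates its result as each new value arrives and never needs the full dataset at rest. This is a toy sketch of the idea, not one of the strategies the source refers to; the class name and the sample price values are made up for illustration.

```python
from collections import deque


class SlidingWindowAverage:
    """Keeps the average of the most recent `size` values of a stream."""

    def __init__(self, size):
        if size <= 0:
            raise ValueError("size must be positive")
        self._window = deque(maxlen=size)
        self._total = 0.0

    def add(self, value):
        """Consume one new value and return the updated window average."""
        if len(self._window) == self._window.maxlen:
            # The oldest value is about to be evicted by the append below.
            self._total -= self._window[0]
        self._window.append(value)
        self._total += value
        return self._total / len(self._window)


# Hypothetical stream of stock prices arriving one at a time.
average = SlidingWindowAverage(size=3)
readings = [average.add(price) for price in [10, 20, 30, 40]]
```

Each update costs the same small amount of work regardless of how much data has already passed through, which is the property high-velocity workloads need.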

Conclusion

There is a strong movement towards cloud computing, which can provide the efficiency, speed, and flexibility needed to process big data. In this competitive environment, a further term has emerged, big data analytics as a service, which reflects the importance given to analytics. Even small and medium-sized businesses whose data has not yet reached a large volume are using big data analytics. Organizations have realized that users are interested in insights from data rather than in the data alone. Because big data analytics provides such insights, it is a topic for future work. Cloud computing is certain to play a major role in handling massive amounts of data and in data analytics.

References:

1. D. W. Hong, «Technical Trend of the Searchable Encryption System», Electronic and Telecommunications Trends, vol. 23, no. 4, 2008.

3. «Big Data: The next frontier for innovation, competition, and productivity», McKinsey.

4. S. K. Eun, «Cloud Computing Security Technology Trends», Review Security and Cryptology, vol. 20, no. 2.

5. A. Berson, S. J. Smith, K. Thearling, Building Data Mining Applications for CRM.

6. M. Jeffery, Data-Driven Marketing: The 15 Metrics Everyone in...

7. R. Jennings, Cloud Computing with the Windows Azure Platform.

8. D. Sitaram, G. Manjunath, Moving To The Cloud: Developing Apps in the New World of Cloud Computing.

9. T. Arias, The Cloud Computing Handbook: Everything You Need to Know about Cloud Computing.

10. Gladinet, www.gladinet.com.

11. Eaton, Deroos, Deutsch, Lapis, Zikopoulos, Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data. New York: McGraw-Hill, 2012.
