This article examines the negative consequences of using artificial intelligence. AI already influences almost every area of life, yet so far its use has done society more harm than the promised good. The problem lies not in the technology itself but in how it is applied, and policy is needed to change this.

Keywords: technology, artificial intelligence, automation, big data, inequality, social networks.

Artificial intelligence (AI) is often hailed as the most exciting technology of our era, one that promises to transform our economy, our lives and our opportunities. Some even see AI as a path to rapid progress toward “intelligent machines” that will soon surpass human abilities in most domains. Over the last decade AI has indeed advanced rapidly, especially in new methods of statistical data processing and machine learning that make it possible to work with huge amounts of unstructured data. This has already affected almost every area of human activity: AI algorithms are now used by virtually all online platforms and in industries ranging from manufacturing and healthcare to finance and wholesale and retail trade.

Yet today's AI technologies, especially those built on the currently dominant paradigm of statistical pattern recognition and big data processing, are more likely to produce negative social consequences than to deliver the promised benefits. These effects can be seen in product markets and advertising, and in the labor market, where they take the form of rising inequality, wage stagnation and job destruction. AI also has broader social implications for social communication, political discourse and democracy. In all of these cases, the main problem is not AI technology as such but the way data and its use are approached by the leading companies, which have a huge influence on the direction in which these technologies develop.

In other areas, new technologies may harm consumers even more. To begin with, online platforms can gain control over enormous amounts of data, because when they collect or buy information about some users, they also gain access to data about others. Such data externalities typically arise when people share information about their friends and contacts, or share information that is correlated with information about other people in the same demographic group. Data externalities can concentrate too much information in a few companies, threaten privacy and reduce consumer welfare. Worse still, companies can use their informational advantage, their knowledge of consumer preferences, to manipulate consumer behavior. Standard economic models assume that consumer decisions are fully rational and therefore not subject to such manipulation. In reality, however, consumers are probably not fully aware of how many new data collection and processing methods are being used to track and predict their behavior.

The basic idea behind such manipulation was described by the legal scholars Jon Hanson and Douglas Kysar in their 1999 article “Taking Behavioralism Seriously: Some Evidence of Market Manipulation.” They argued that once one accepts that individuals systematically behave irrationally, it follows that others will exploit this behavior for their own gain. Advertising has, of course, always contained an element of manipulation, but AI tools can expand its scale [1].

There are already examples of such AI-driven manipulation. The US retail chain Target, for instance, has successfully identified pregnant women among its customers and then targeted them with disguised advertising for baby products. Other companies use available customer data to determine when people are in a bad mood and then actively advertise the products that people in that state tend to buy on impulse. Platforms manipulate users as well: YouTube and Facebook, for example, use their algorithms to determine which group a particular user belongs to and then offer that user the videos and news most likely to interest them. Another example is Just Walk Out, introduced by Amazon in 2016, which let shoppers in Amazon stores skip the checkout: AI used cameras and sensors to track the goods taken from the shelves and then automatically charged the required amount to customers’ accounts [2].

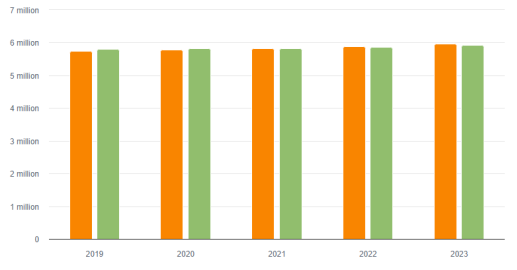

The impact of AI technologies on the labor market could be even more detrimental. Much evidence suggests that rising inequality in labor markets is driven in part by the rapid adoption of automated technologies that are displacing low- and medium-skilled workers. The negative impacts of automation on inequality were felt even before the advent of AI. The use of AI and the active use of data are expanding the automation of the labor market and may increase the trend towards rising inequality. The main threat from labor market automation is typical for large cities and industrialized regions, where this process can significantly affect 33–36 % of jobs. Analysts from the Center for Human Resources Development assessed the possibility of performing certain functions of groups of professions by automatic machines, robots or artificial intelligence, taking as a basis the National Classification of Occupations of the Republic of Kazakhstan in 2017, which is harmonized with the International Standard Classification of Occupations of 2008 and classifies occupations according to the level and specialization of the skills used reflecting the specifics of our economy. In total, the experts examined 423 groups of professions, collectively containing almost 3.4 thousand main functions performed. Based on current employment figures, of the nearly 8.7 million workers analyzed, 6.5 million workers, or 75 %, are extremely unlikely to be automated. Moderate probability of automation is possible for 1.5 million people, or 17 %. High probability for 686 thousand people, or 8 % [3].

Fig. 1. Dynamics of the working-age population
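The shares quoted from [3] can be checked directly from the headcounts. The Python sketch below simply recomputes them; it assumes, as the rounded figures suggest, that the analyzed total equals the sum of the three groups (8,686 thousand, the “nearly 8.7 million” in the text). The group labels are illustrative names, not terms from the source.

```python
# Headcounts (in thousands of workers) by automation-probability group,
# as quoted from the Center for Human Resources Development estimate [3].
groups = {
    "extremely unlikely": 6500,
    "moderate": 1500,
    "high": 686,
}

# Assumption: the analyzed total is the sum of the three groups,
# which matches the "nearly 8.7 million" figure in the text.
total = sum(groups.values())

# Share of each group, rounded to a whole percent as in the source.
shares = {name: round(100 * count / total) for name, count in groups.items()}

print(f"total analyzed: {total} thousand")
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```

Each share rounds to the reported 75%, 17% and 8%, so the published breakdown is internally consistent.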

In principle, automation can improve economic efficiency, but there are reasons to expect the opposite result. The main one is that labor markets are imperfect, so the cost of labor to firms is higher than its social opportunity cost. This gives firms an incentive to automate aggressively so that rents stay with them rather than passing to workers, even though the practice reduces social welfare.
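The mechanism can be made concrete with a toy comparison. The Python sketch below is a hypothetical illustration rather than a model taken from the article: it assumes a single task, a wage that exceeds labor's social opportunity cost because of labor market imperfections, and a machine that can perform the task at a fixed cost. The function name `automation_outcome` and all numbers are illustrative.

```python
def automation_outcome(wage, opportunity_cost, machine_cost):
    """Compare a firm's private automation decision with the social optimum.

    All arguments are per-task costs in the same (hypothetical) units:
      wage             -- what the firm actually pays for the labor; with an
                          imperfect labor market it includes a rent for workers
      opportunity_cost -- the social value of that labor in its next-best use
      machine_cost     -- the cost of doing the same task with automation
    """
    # The firm automates whenever the machine is cheaper than the wage it pays.
    firm_automates = machine_cost < wage
    # Society gains only if the machine is cheaper than labor's opportunity cost.
    socially_efficient = machine_cost < opportunity_cost
    # Change in total surplus if the task is automated: the labor is freed for
    # its next-best use, but the machine has to be paid for.
    surplus_change = opportunity_cost - machine_cost
    return firm_automates, socially_efficient, surplus_change


# Hypothetical numbers: wage 100, opportunity cost 70, machine cost 85.
firm, efficient, delta = automation_outcome(100, 70, 85)
print(firm, efficient, delta)  # True False -15
```

With these illustrative values the firm saves 15 per task (100 - 85), workers lose a rent of 30 (100 - 70), and total surplus falls by 15. Automation is socially worthwhile only when the machine is cheaper than labor's opportunity cost, but the firm automates whenever it is cheaper than the wage; any machine cost between the two produces the excessive automation the paragraph describes.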

Other uses of AI may have even more serious negative consequences. One of them is the use of algorithms and employee data to intensify the monitoring of workers' performance. When workers receive a share of the rents a firm earns (their contracts may provide for this), closer monitoring can benefit companies that want to recapture some of those rents. But such a transfer of rents is socially inefficient: it is a costly activity that does not increase social welfare but merely redistributes it from one group of agents to another.

AI-based automation can have other negative consequences as well. Although it is unlikely to cause mass unemployment in the near future (so far, the displacement of workers by automated technologies has been modest), the displacement of human labor still has many devastating consequences for society. Citizens with weak attachment to work may participate less in social and political life. More importantly, automation is shifting the balance of power from labor to capital, which could have far-reaching consequences for democratic institutions. In other words, to the extent that democratic politics depends on labor and capital counterbalancing each other, automation can harm democracy by diminishing the role of labor in production.

Take social media. The main reason for the problems described above is that these platforms try to maximize user engagement with all kinds of attention-grabbing content designed to attract clicks. This goal is rooted in their business model, which centers on monetizing data and traffic through advertising, and it is possible because the sector is unregulated. The same is true of the negative effects of automation: they are not inevitable. AI can also be used to raise worker productivity and create new tasks for workers, so the fact that it is used primarily for automation is a deliberate choice. That choice is driven by the leading technology companies, whose priorities and business models center on algorithmic automation.

Overall, the current direction of AI development empowers corporations at the expense of workers and citizens, and often gives governments additional tools for control and sometimes even repression (for example, new methods of Internet censorship and facial recognition software).

All of this leads to a simple conclusion: a priority of new policy should be the systematic regulation of data collection and use, of the use of AI to manipulate user behavior, and of online communication and information exchange.

References:

- Artificial intelligence in retail [Electronic resource] // Retail. URL: https://www.retail.ru/articles/iskusstvennyy-intellekt-v-riteyle/

- Amazon closed its Just Walk Out stores in the US amid a scandal [Electronic resource] // Snob. URL: https://snob.ru/news/kompaniia-amazon-so-skandalom-zakryla-v-ssha-magaziny-po-sisteme-just-walk-out/

- The Center for Human Resources Development assessed the impact of automation and artificial intelligence on the labor market in Kazakhstan [Electronic resource] // gov.kz. URL: https://www.gov.kz/memleket/entities/enbek/press/news/details/579455?lang=ru